Robots in factories are really good at picking up objects they’ve been pre-programmed to handle, but it’s a different story when new objects are thrown into the mix. To overcome this frustrating inflexibility, a team of researchers from MIT devised a system that essentially teaches robots how to assess unfamiliar objects for themselves.

As it stands, engineers have basically two options when it comes to developing grasping robots: task-specific learning and generalized grasping algorithms. As the name implies, task-specific learning is connected to a particular job (e.g. pick up this bolt and screw it into that part) and isn’t typically generalizable to other tasks. General grasping, on the other hand, allows robots to handle objects of varying shapes and sizes, but at the cost of being unable to perform more complicated and nuanced tasks.

Today’s robotic grasping systems are thus either too specific or too basic. If we’re ever going to develop robots that can clean out a garage or sort through a cluttered kitchen, we’re going to need machines that can teach themselves about the world and all the stuff that’s in it. But in order for robots to acquire these skills, they have to think more like humans. A new system developed by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) takes us one step closer to that goal.

It’s called Dense Object Nets, or DON. This neural network generates an internal impression, or visual roadmap, of an object following a brief visual inspection (typically around 20 minutes). This allows the robot to acquire a sense of an object’s shape. Armed with this visual roadmap, the robot can then go about the task of picking up the object, despite never having seen it before. The researchers, led by Peter Florence, will present this research next month at the Conference on Robot Learning in Zürich, Switzerland, but for now you can check out their paper on the arXiv preprint server.

During the learning phase, DON views an object from multiple angles. It recognizes specific spots, or points, on the object, and maps all of an object’s points to form an overall coordinate system (i.e. the visual roadmap). By mapping these points together, the robot gets a 3D impression of the object. Importantly, DON isn’t pre-trained with labeled datasets, and it’s able to build its visual roadmap one object at a time without any human help. The researchers refer to this as “self-supervised” learning.
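One common way to train this kind of label-free mapping is a pixelwise contrastive loss, sketched below in plain Python. This is a sketch under stated assumptions rather than the researchers' code: it assumes each point on the object is represented by a short descriptor vector, and that the system already knows which pixels in two views show the same physical spot (for instance, from the known camera motion). The function names and the `margin` value are hypothetical.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two descriptor vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pixelwise_contrastive_loss(matches, non_matches, margin=0.5):
    """Contrastive loss over pairs of descriptors taken from two views.

    matches:      list of (descriptor_a, descriptor_b) pairs that show the
                  same physical point; the loss pulls these together.
    non_matches:  list of pairs that show different points; the loss pushes
                  these at least `margin` apart (hypothetical default).
    """
    # Matching points are penalized by how far apart their descriptors are.
    match_loss = sum(squared_distance(a, b) for a, b in matches)
    match_loss /= max(len(matches), 1)

    # Non-matching points are penalized only if they sit closer than the margin.
    non_match_loss = sum(
        max(0.0, margin - math.sqrt(squared_distance(a, b))) ** 2
        for a, b in non_matches
    )
    non_match_loss /= max(len(non_matches), 1)

    return match_loss + non_match_loss


# Toy usage: one matching pair that is slightly off, one non-match that is far apart.
loss = pixelwise_contrastive_loss(
    matches=[([0.0, 0.0], [0.3, 0.4])],
    non_matches=[([0.0, 0.0], [3.0, 4.0])],
)
print(loss)
```

Minimizing a loss like this needs no human labels, because the pairs come from the geometry of the scene rather than from annotation. That is the sense in which the learning is "self-supervised."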

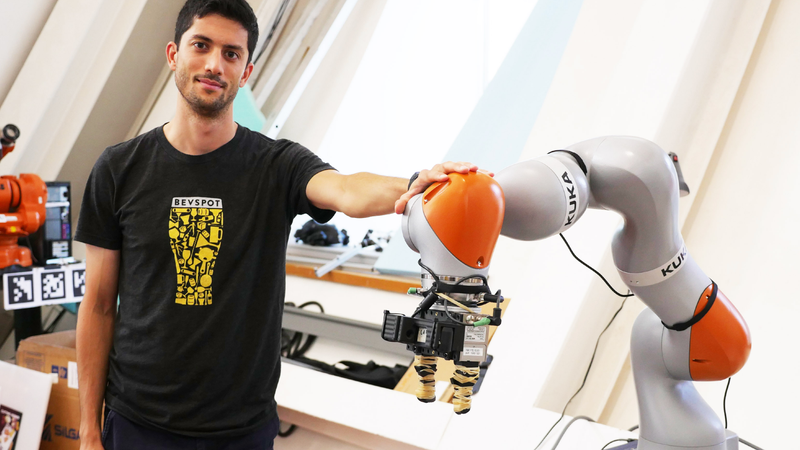

Once training is complete, a human operator can point to a specific spot on a computer screen, which tells the robot where it’s supposed to grasp the object. In tests, for example, a KUKA LBR iiwa robot arm lifted a shoe by its tongue and a stuffed animal by its ear. DON is also capable of classifying objects by type (e.g. shoes, mugs, hats), and can even discern specific instances within a class of objects (e.g. telling a brown shoe from a red shoe).
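The point-and-grasp step can be pictured as a nearest-neighbor search. The Python sketch below assumes the visual roadmap is stored as a grid of per-pixel descriptor vectors, and that locating the operator's chosen spot in a fresh camera view means finding the pixel whose descriptor is closest to the one that was clicked. The function name `best_match` and the toy data are hypothetical and are not taken from the researchers' code.

```python
def best_match(reference_descriptor, descriptor_map):
    """Find the pixel whose descriptor is closest to the reference.

    reference_descriptor: descriptor of the spot the operator clicked.
    descriptor_map:       2D grid (rows x cols) of descriptors for a new view.
    Returns ((row, col), squared_distance) for the closest pixel.
    """
    best_pixel, best_dist = None, float("inf")
    for r, row in enumerate(descriptor_map):
        for c, descriptor in enumerate(row):
            dist = sum(
                (x - y) ** 2 for x, y in zip(reference_descriptor, descriptor)
            )
            if dist < best_dist:
                best_pixel, best_dist = (r, c), dist
    return best_pixel, best_dist


# Toy usage: a 2x2 "image" with 2-dimensional descriptors (made-up values).
new_view = [
    [[0.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [1.0, 1.0]],
]
clicked = [0.9, 0.1]  # descriptor of the spot chosen by the operator
pixel, distance = best_match(clicked, new_view)
print(pixel, distance)
```

In a setup like this, the returned distance could also serve as a rough confidence check: a large value would suggest the chosen spot is not visible in the new view.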

In the future, a more sophisticated version of DON could be put to work on a variety of jobs, such as collecting and sorting objects in warehouses, operating in hazardous environments, and handling odd clean-up tasks in homes and offices. Looking ahead, the researchers would like to refine the system so that it knows where to grasp an object without human intervention.

Researchers have been working on computer vision for the better part of four decades, but this new approach, in which a neural net teaches itself to understand the 3D shape of an object, seems particularly fruitful. Sometimes, the best approach is to make machines think like humans.
