Internet users in Vietnam often treat “Ms. Google” as a source of… entertainment. Whether “she” is reading text aloud or giving directions to people on the road, her voice reliably makes listeners want to laugh. With Viettel's AI, however, listeners will find the experience different…

A “human-like” reading voice on online newspapers

Text-to-speech technology is used in many areas of life, including audio news (báo nói). When this format first appeared, it gave readers a new way to access information but was judged to be “no different from Ms. Google”.

That changed when the online newspaper Dân trí and many other online newspapers built audio news on artificial intelligence (AI) technology supplied by Viettel Group. Readers were genuinely surprised by the expressive, smooth voice coming from their computers. They can also choose the voice they prefer, such as male or female, Northern or Southern accent, so that it feels closer to their own region.

“Viettel's AI technology is developed on speech synthesis data and produces a machine voice that sounds as natural as a human one. We are making the machine voices increasingly diverse. Besides voices by gender and region, there are also voices by age group, such as children, the elderly, and young adults…” said Nguyễn Hoàng Hưng, Deputy Head of the Science and Technology Department at Viettel's Cyberspace Center (Trung tâm Không gian mạng).

The product is rated as one of the best synthetic voice technologies in the world today. The text-to-speech technology developed by Viettel can serve as a full replacement for announcers and professional voice recorders in tasks such as reading stories, reading news, and running automated information hotlines…

But that is only one very small application of the AI technology that Viettel has researched and put into practice. In media and marketing, the Cyberspace Center has built Reputa, a system able to monitor 100% of channels in cyberspace and to detect and accurately flag hot spots of public opinion and signs of a media crisis for businesses, organizations, and the Government.

Social listening is not unfamiliar to businesses. Today, with social networks growing strongly, most large businesses and organizations use it to spot media crises in time. Viettel's Reputa, however, is the first system in Southeast Asia able to monitor information in video and in print newspapers.

According to Nguyễn Hoàng Hưng, video monitoring rests on a core technology that converts the audio of a video into text (speech to text). Reputa adds natural language processing, which lets it determine whether the speaker's attitude is positive or negative. Given the keywords a customer specifies, Reputa can therefore identify the content of a video on the Internet and, most importantly, whether that content is negative.
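To make the keyword-plus-sentiment idea concrete, here is a minimal Python sketch. It is not Reputa's implementation, which the article does not describe. It assumes the transcript has already been produced by a speech-to-text step, and it replaces the natural language processing model with a toy word list. The names `Mention`, `classify_sentiment`, `find_mentions`, and both word lists are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical toy lexicons; a real system would use a trained NLP model.
NEGATIVE_WORDS = {"scam", "broken", "terrible", "complaint"}
POSITIVE_WORDS = {"great", "reliable", "excellent", "love"}


@dataclass
class Mention:
    keyword: str
    sentence: str
    sentiment: str  # "positive", "negative", or "neutral"


def classify_sentiment(sentence: str) -> str:
    """Toy lexicon-based polarity: positive word count minus negative word count."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def find_mentions(transcript: str, keywords: list[str]) -> list[Mention]:
    """Return one Mention per (sentence, keyword) match in the transcript."""
    mentions = []
    for sentence in transcript.split("."):
        sentence = sentence.strip()
        if not sentence:
            continue
        lowered = sentence.lower()
        for kw in keywords:
            if kw.lower() in lowered:
                mentions.append(Mention(kw, sentence, classify_sentiment(sentence)))
    return mentions
```

Calling `find_mentions(transcript, ["AcmePhone"])` on a transcript would return only the sentences that mention the made-up brand, each labelled with a polarity, which is the kind of output a monitoring dashboard could then filter for negative items.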

Monitoring of print newspapers, meanwhile, relies on image processing. Starting from photographed or scanned newspaper pages, image-to-text conversion is applied so that the system can detect the content a business needs to track.

… And a dangerous disease in Vietnam

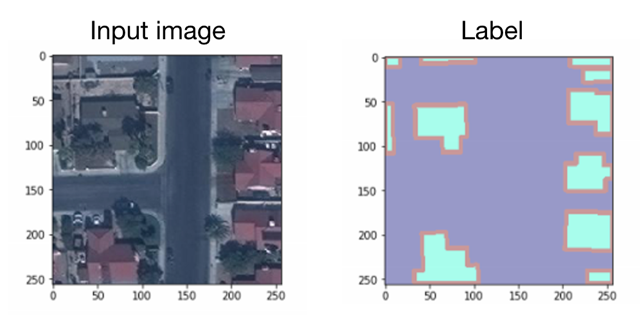

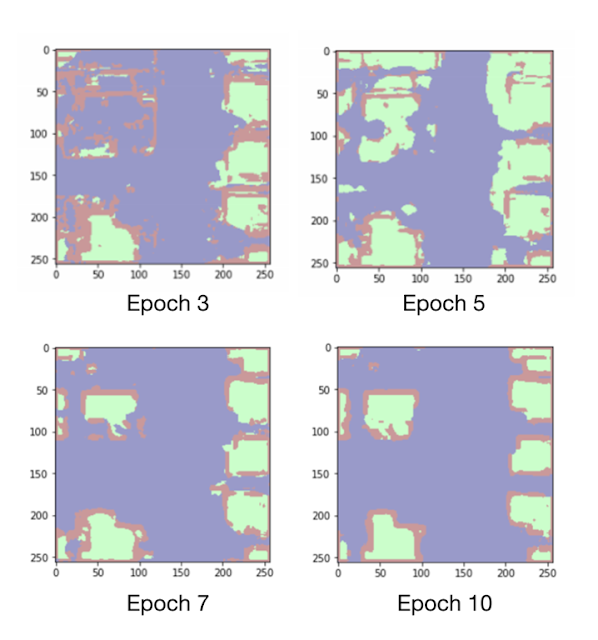

For a little over a month, patients having a gastrointestinal endoscopy at some hospitals have no longer had to wait a full hour for results as before; it now takes only a few minutes. This is thanks to Viettel's endoscopic image diagnosis support system, the first product in Vietnam to apply artificial intelligence to endoscopy.

“All images captured during the endoscopy are fed into Viettel's system, which sorts them by location in the digestive tract. It then outlines the areas it judges to show signs of lesions, assesses the severity of those lesions, and finally returns the results to the doctor,” said Đặng Quỳnh Anh, an algorithm research engineer at Viettel's Applied Mathematics Research Department (Ban Nghiên cứu Ứng dụng Toán học).
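The four stages described in the quote (sort by location, outline suspect regions, grade severity, report to the doctor) can be sketched as a simple pipeline. This is only an illustration of the flow, not Viettel's system: the three model functions are passed in as placeholders, and the names `Lesion`, `ImageFinding`, `analyze`, and `flagged` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Box = tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class Lesion:
    box: Box
    severity: str


@dataclass
class ImageFinding:
    image_id: str
    location: str  # e.g. "stomach"; the label set is an assumption
    lesions: list[Lesion] = field(default_factory=list)


def analyze(
    images: dict[str, Any],
    classify_location: Callable[[Any], str],
    detect_regions: Callable[[Any], list[Box]],
    grade_severity: Callable[[Any, Box], str],
) -> list[ImageFinding]:
    """Run the three placeholder models on every image and collect the findings."""
    findings = []
    for image_id, pixels in images.items():
        location = classify_location(pixels)        # stage 1: where in the tract
        lesions = [
            Lesion(box, grade_severity(pixels, box))  # stage 3: how severe
            for box in detect_regions(pixels)         # stage 2: suspect regions
        ]
        findings.append(ImageFinding(image_id, location, lesions))
    return findings


def flagged(findings: list[ImageFinding]) -> list[ImageFinding]:
    """Stage 4: keep only images with at least one suspected lesion for review."""
    return [f for f in findings if f.lesions]
```

Keeping the models as injected callables means the pipeline can be exercised with stub functions, and the doctor-facing report is just the filtered list returned by `flagged`.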

So instead of spending a long time examining the images by eye and judging lesions from experience, doctors now only need to look at the system's results, check them, and decide on a treatment plan. Besides saving doctors time and effort, this AI product also increases accuracy in identifying lesions and helps avoid missing lesions at a very early stage.

“As the system develops further, it may not even need a doctor to recheck the results and could return them directly to the patient,” Quỳnh Anh asserted.

Stomach disease may sound minor, but it is in fact the most common illness in Vietnam, and stomach cancer ranks third among diseases by mortality rate. According to statistics, Vietnam records more than 17,000 new cases of stomach cancer each year and about 15,000 deaths. What makes the disease dangerous is that its early signs are unclear, so most cases are discovered at a late stage.

For that reason, in healthcare Viettel chose gastrointestinal endoscopy as the first field for its AI technology, aiming to help doctors detect disease earlier and improve the chances of treatment. Once widely deployed, the solution is expected to return diagnostic results 5 times faster and to triple the rate of stomach cancer detected at an early stage compared with before, while also supporting the training of young doctors. Thanks to artificial intelligence, people will in future have access to high-quality medical services everywhere.

“Because Viettel currently has access only to gastrointestinal endoscopy image data from the Hepatobiliary Institute (Viện Gan mật), the AI technology is so far limited to this field. But later we will certainly extend it to other types,” Quỳnh Anh revealed.

Viettel's mission to create a digital life

Returning to “Ms. Google”, Nguyễn Hoàng Hưng said that the text-to-speech technology offered by a global corporation like Google is meant only to serve information queries and does not prioritize Vietnamese. Viettel, a Vietnamese group, has instead gone into this “niche market” and created a product that is higher in quality and can be applied more widely to serve Vietnamese people.

Among AI's many applications, Viettel has chosen to put its first 7 artificial intelligence products into practical use: Cyberbot, a virtual assistant for customer care; audio news (Báo nói); the REPUTA reputation and brand management system; the endoscopic image diagnosis support system; a system for monitoring and warning of changes in forest area; a system for defending against distributed denial-of-service (DDoS) attacks; and a traffic surveillance camera system.

These AI products are Viettel's first answers to the problem of “Creating a digital life” (Kiến tạo cuộc sống số) in Vietnam. More than a timely shift in step with global technology trends, Viettel has chosen to launch AI products that can solve the most pressing practical problems of Vietnamese society.

Businesses need to professionalize and cut costs in customer care and brand communication management. People need high-quality medical services. The country's forest and environmental resources need protection. The Government needs to build an intelligent transport system. And every individual and organization needs protection in the online environment…

These needs also demonstrate the standing, and the responsibility for leading the country's information technology industry, that a large group like Viettel carries on its shoulders. Starting with these 7 AI products, Viettel aspires to become a place where digital technology products converge and spread into every corner of social life, providing solutions of global value and helping Vietnam keep pace with the world in the Fourth Industrial Revolution (4.0).

Nguyễn Quỳnh - ictnews