Fictional robots like BB-8 in Star Wars look like geniuses next to today’s artificial intelligences.

APPLIED PHYSICS LABORATORY: Futurists worry about artificial intelligence becoming too intelligent for humanity’s good. Here and now, however, artificial intelligence can be dangerously dumb. When complacent humans become over-reliant on dumb AI, people can die. The lethal track record goes from the Tesla Autopilot crash last year, to the Air France 447 disaster that killed 228 people in 2009, to the Patriot missiles that shot down friendly planes in 2003.

That’s particularly problematic for the military, which, more than any other potential user, would employ AI in situations that are literally life or death. It needs code that can calculate the path to victory amidst the chaos and confusion of the battlefield, the high-tech Holy Grail we’re calling the War Algorithm. While the Pentagon has repeatedly promised it won’t build killer robots (AI that can pull the trigger without human intervention), people will still die if intelligence analysis software mistakes a hospital for a terrorist hide-out, if a “cognitive electronic warfare” pod doesn’t jam an incoming missile, or if a robotic supply truck doesn’t deliver the right ammunition to soldiers running out of bullets.

Second, the US military has bet on AI to preserve its high-tech advantage over rapidly advancing adversaries like Russia and China. Known as the Third Offset Strategy, this quest starts from the premise that human minds and artificial intelligence working together are more powerful than either alone. But all too often, humans and AI working together are actually stupider than the sum of their parts. Human-machine synergy is an aspiration, not a certainty, and achieving it will require a new approach to developing AI.

Matt Johnson

“If I look at all the advances in technology … one of the places I don’t think we’re making a lot of advances is in that teaming, in that interaction (of humans and AI),” said Matt Johnson, a Navy pilot turned AI researcher, at a conference here last week. The solution requires “really changing the questions we’re asking when we design these systems,” he said. “It involves branching the design of these systems out of the world of just engineering… and into a bigger world that involves human factors and social implications, ethical implications.”

“You can’t engineer out stupid. People are always going to have that option,” Johnson said to laughter. “However, as engineers and designers you can certainly help them avoid going down that path.”

Paul Scharre

Unfortunately, engineers and designers often try to “solve” the problem of human error by minimizing human input, military futurist Paul Scharre told me in an interview. “When people design these systems, they’re trying to design the human away,” he said, “but if you want human-machine teaming” — the mantra of the Pentagon’s Offset Strategy — “you have to start with the human involved from day one.”

Yes, you need to do a good job designing “the machine itself,” of course, Scharre said, but that’s not enough. “The system that you want to have to accomplish the task consists of the machine, the human, and the environment it’s in.”

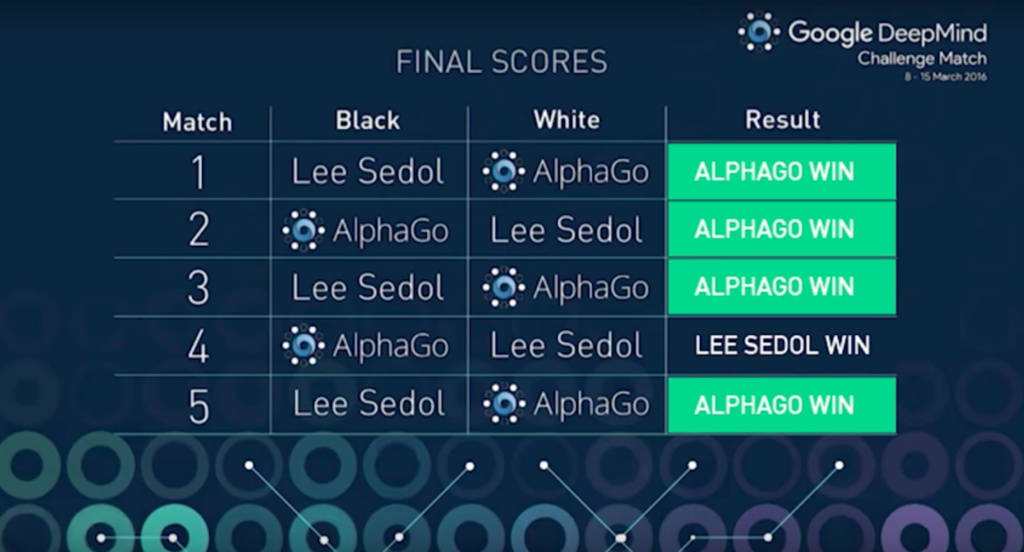

Google’s AlphaGo program decisively defeated champion human Go players.

The Ambiguity Problem

AI is beautifully suited for environments where everything is mathematical and clear; messy situations, not so much. For example, computers have handily beaten the best human players at both chess and Go. Both games are so subtle and complex that there are more possible games than there are atoms in the universe. Google’s AlphaGo program has even been praised for creative play, coming up with strategies no human ever thought of.

But chess and Go are both literally black-and-white. What each piece can do is strictly defined. At any given moment, each piece is in a specific place on the grid, never in-between. And each move contributes either to victory or defeat.

Suchi Saria

AIs can analyze hundreds of past games and decide, “If I made this move, I often win. It’s a good move… If I often lose, it’s a bad move,” said Suchi Saria, a Johns Hopkins assistant professor, at the AI conference. There’s no ambiguity: no weather, no mud, no human emotion.
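The rule of thumb Saria describes can be sketched in a few lines of Python. This is only an illustration of the idea, not how AlphaGo or any real game engine works; the game records, the move labels, and the function name `move_win_rates` are all made up for the example.

```python
from collections import defaultdict


def move_win_rates(games):
    """Return, for each move, the fraction of past games won after playing it.

    `games` is a hypothetical list of (moves, won) pairs: `moves` is a list
    of move labels and `won` is True if the player went on to win.
    """
    wins = defaultdict(int)
    plays = defaultdict(int)
    for moves, won in games:
        for move in set(moves):  # count each move at most once per game
            plays[move] += 1
            if won:
                wins[move] += 1
    return {move: wins[move] / plays[move] for move in plays}


# Invented records, for illustration only.
past_games = [
    (["e4", "Nf3"], True),
    (["e4", "Qh5"], False),
    (["d4", "Nf3"], True),
    (["d4", "Qh5"], False),
]

rates = move_win_rates(past_games)
best_move = max(rates, key=rates.get)  # the move that most often led to a win
```

The approach works here only because every game ends in an unambiguous win or loss, which is exactly the property that real-world situations tend to lack.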

Things aren’t so straightforward, Saria said, if you apply artificial intelligence to a situation where cause and effect are often unclear. She researches the use of AI in medicine, but the same would apply to AI on battlefields. A human doctor can read between the lines of the reported symptoms to diagnose a difficult patient, and a human commander with what Clausewitz called coup d’oeil can intuit where the enemy is vulnerable, because of the so-far unique human ability to absorb enormous amounts of information and make the right choice even when much of the data is contradictory. AI can’t do either, yet.

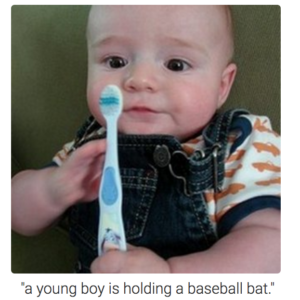

To teach AIs about ambiguous, complex situations, designers often use something called machine learning, but the technique is limited. Sometimes, AIs learn the wrong thing, like the Microsoft’s Tay chatbot that started making racist tweets within hours of going online, or the image-recognition software that tagged a photo of a baby with a toothbrush as “a young boy is holding a baseball bat.” Sometimes AIs learn the right thing about the specific situation that they’ve been trained on but can’t apply it to other, similar situations.

An example of the shortcomings of artificial intelligence when it comes to image recognition. (Andrej Karpathy, Li Fei-Fei, Stanford University)

“How well does it generalize, generalize to new environments that are a little bit different from the training data?” Saria asked. In her research, she found, “as soon as you start taking the testing environment and deviating from your training environment, your generalization suffers.”

How do you design an AI to guard against such failures? “They need to know when they don’t know,” Saria said. “They need to identify when is it that they’re observing something that is new and different from what they’ve seen before, and be able to say to the (human supervisor), ‘I’m not so good at that.’”
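One very simple way to picture “knowing when you don’t know” is a wrapper that checks whether an input looks like the training data before answering, and hands off to a person when it does not. The sketch below is an assumption-laden toy, not a method described by Saria: it uses a single numeric input, a z-score cutoff, and hypothetical names (`CautiousModel`, `max_z`, the stand-in `predict` function). Real systems face far harder versions of this check.

```python
import statistics


class CautiousModel:
    """Wraps a predictor and defers to a human on unfamiliar inputs."""

    def __init__(self, training_inputs, predict, max_z=3.0):
        # Summarize what the training data looked like.
        self.mean = statistics.mean(training_inputs)
        self.stdev = statistics.stdev(training_inputs)
        self.predict = predict
        self.max_z = max_z  # hypothetical cutoff, in standard deviations

    def is_familiar(self, x):
        """True if x lies within max_z standard deviations of the training mean."""
        z = abs(x - self.mean) / self.stdev
        return z <= self.max_z

    def decide(self, x):
        """Answer only when the input resembles the training data."""
        if not self.is_familiar(x):
            return "defer to human"
        return self.predict(x)


# Invented training inputs and a stand-in predictor, for illustration only.
training_inputs = [10.0, 12.0, 11.0, 9.0, 13.0]
model = CautiousModel(training_inputs, lambda x: "high" if x > 11 else "low")

familiar_answer = model.decide(11.5)    # close to the training data
unfamiliar_answer = model.decide(40.0)  # far outside anything seen in training
```

The design choice worth noticing is that the handoff happens before the prediction is made, so the human is asked for help instead of being handed a confident wrong answer.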

“Automation is pretty brittle,” Scharre told me. “If you can understand well the parameters of the problem you’re trying to solve, like chess (chess is a pretty bounded problem), you can program something to do that better than a human…. When you go outside the bounds of the program behavior, (however) it goes from super smart to super dumb in an instant.”

Tony Stark (Robert Downey Jr.) relies on the JARVIS artificial intelligence to help pilot his Iron Man suit. (Marvel Comics/Paramount Pictures)

Coming Soon

So how do you keep artificial intelligence — and the humans using it — from going stupid at the worst possible time? We explore the problems and solutions in depth in the next two stories of our series on military AI, The War Algorithm:

Fumbling the Handoff, running Monday, will look at getting AI to ask for human input in ambiguous situations — like the Tesla crash and the Patriot friendly-fire incident — without the human getting caught off guard, panicking, and making things worse — which is what killed everyone on Air France 447.

Learning to Trust will look at making AI more transparent — and perhaps more human-like — so human beings will trust and use them.
